Earlier this year I began a project named Spatial Synthesis. Its goal was to develop a novel methodology for designing Extended Reality (XR) applications, with a particular focus on User Experience (UX). At the time I was designing a Virtual Reality (VR) application, Salaryman RESCUE!, which has now been released on Steam and itch.io, and in fact Spatial Synthesis was initially going to be a devlog for the game before I decided to expand its scope.

What constitutes good design practice in spatial computing? There is very little documentation on this currently available and I think I’m in the perfect position as an XR professional and design student to investigate this idea.

https://dystopianthunderdome.wordpress.com/2023/08/11/advanced-digital-artefact-pitch/

What started as a game development log, aimed at documenting the creative process behind XR game production, gradually shifted gears. It transformed into an insight-based exploration of designing for spatial media in general, focusing on the strategic principle of exaptation. This principle, the art of repurposing practices for novel uses, became the core of my project, guiding my approach to XR development, and led to further insights related to spatial evolution. I found it interesting that through the use of a concept taken from evolutionary biology, I was able to glean deeper biological insights about spatial media design.

Exaptation — a feature that performs a function but that was not produced by natural selection for its current use. Perhaps the feature was produced by natural selection for a function other than the one it currently performs and was then co-opted for its current function. For example, feathers might have originally arisen in the context of selection for insulation, and only later were they co-opted for flight. In this case, the general form of feathers is an adaptation for insulation and an exaptation for flight.

University of California, Berkeley, https://evolution.berkeley.edu/exaptations/

Though my initial vision of a vibrant Y2K-tinged website, home to a detailed design bible for spatial media and accompanied by a thriving Discord community, ran into the realities of a demanding schedule of VR game production and full-time university, the journey has been no less enriching. Throughout this process, I’ve unearthed key insights into the application of exaptation in XR, the importance of object-oriented UX design in preventing ‘UX whiplash,’ and the development of a unique visual language rooted in ‘future nostalgia’ and vast, open expanses. It is ironic that my initial goal of creating a novel methodology for designing spatial computing applications, which was pushed aside in favour of a shiny, branded website and Discord community, has been achieved almost accidentally along the way. For this reason, even though I don’t have that big, shiny digital artefact to point to, I am still pleased with where I have got to with this project.

This report is not just a narrative of what was achieved (and not achieved), but an honest reflection of the learning moments, the challenges navigated, and the insights gained. These experiences have not only shaped the current state of Spatial Synthesis but have also laid a foundation for my future career as an XR professional, and, I vainly hope, contributed however slightly to the wider field of designing fluently for spatial media.

Exaptation

One of my very first posts on this blog was about the deep link between ecology and technology, and I once again find myself at the crossroads between these two fields. Exaptation is a term taken from evolutionary biology and refers to a trait that evolved to serve one function being co-opted for another. The feather, which was likely selected for thermal regulation but has been exapted to aid in flight, is the classic example.

One of my instinctual practices when coming up with fun core mechanics in XR applications has been to find inspiration in the real world around me. This practice is certainly not new to digital media or game development (Miyamoto’s Pikmin concept was famously inspired by his gardening hobby), but the spatial link between the real world and XR technology makes this process even more suitable. The challenge lies in making XR experiences feel intuitive – and that’s where exaptation shines. By repurposing familiar physical interactions into the digital realm, I could bypass the steep learning curves often associated with new technology. It was about blending the known with the unknown.

The repurposing of existing real-world tools and technologies into the XR environment not only provides the sense of effortless familiarity that UX designers are seeking, but also opens the door to unprecedented interactions and experiences.

https://dystopianthunderdome.wordpress.com/2023/09/15/spatial-synthesis-reflection/

Looking at any successful XR application, we can see physical practices that have been exapted into digital success stories. Using a sword to slice musical notes in Beat Saber just feels intuitive, because most people have swung a sword before – pretend or otherwise. The key to exaptation for XR is to present an object that has a recognisable function – an object that dares the user to try something, just to see if they can – and reward the user by not only accounting for that use, but by going even further: giving it a use it could never have in the real world. This leads to moments of user delight. Good XR design is less about learning new tricks and more about applying old ones in novel ways. In Salaryman RESCUE!, a key mechanic is purchasing bottled beer from a vending machine. We designed the bottle with a twist-cap and a satisfying fizzy sound effect, because we thought people would try to open it that way. Then we thought it would be fun if you could simply smack the lid off. We didn’t force-feed this information to the user, but any user who tries it will be pleasantly surprised. This leads me to my next insight…

It is much better to simply understand you can’t interact with something than to try and fail. That is an immersion-breaker.

Ben Lang’s Inside XR Design series for Road to VR is an analogous artefact.

Spatial Evolutionary Biology and UX Whiplash

Our brains are hundreds of millions of years in the making. Hundreds of millions of years of evolving to traverse spatial environments. XR UX, rather than being a continuation of screen media, is a continuation of these ancient cognitive processes. When I brought this up with my professor, Travis Wall, he put it very succinctly: “touchscreen UI is a continuation of a language of interacting with machines that is fairly new (only a few hundred years old), and is a literacy because it is grounded in abstractions and symbology. Where interacting with spatial media is more related to our own physical movement in space, where we have hundreds of thousands of years of evolution and cognitive hardwiring.”

(That’s not to say there aren’t lessons to be learned from touchscreen UI. After all, flatscreen displays work in real space, so they can work in spatial media also.)

When a user tries something in XR that would work in the real world, for example grabbing a drawer handle and trying to open it, and it fails, they are immediately disappointed. There is a disconnect between the lessons they have learned by existing in space their entire lives and what is happening in this mediated spatial experience. It immediately rips the user out of their immersion and destroys the magic, and they are quite likely to end the session. I call this effect “UX whiplash”. UX whiplash exists in legacy media, too, but the brain’s spatial evolutionary biology makes the effect far more disruptive when experienced in XR.

Humans naturally think of the world as objects. As we evolved in real-world environments, we came to understand our physical experiences in terms of tangibles.

Pradipto Chakrabarty, UX Planet, https://uxplanet.org/object-oriented-user-experience-design-the-power-of-objects-first-design-approach-e65e07488a00

Towards the tail end of the project, my team and I exhibited Salaryman RESCUE! and Pond Scum at PAX 2023 in Melbourne. We set up VR headsets and observed hundreds of players playing our games. It turned out to be a treasure trove of insights, and we left PAX with pages and pages of notes. When designing XR interactions, I found I had to continuously ask myself “I wonder if I can do this?”, pretending I was a new user rather than someone who knew exactly what I could and couldn’t do, to cover as many potential triggers of UX whiplash as possible. However, there is only so much one person can think of. At PAX, I watched as players tried to interact with things that I had never considered interacting with. Because of these observations, we ended up making every object in the game interactable, even if it had no special use. The exhibition ended up serving as a hyper-focused beta test period, but even more than this, it taught me a lot about designing in XR.

Object-Oriented User Experience (OOUX), a UX term coined by Sophia Prater, was influenced by object-oriented programming, and is a model of UX design organised around objects rather than actions. While it was a novel approach for flatscreen media, it’s almost a necessity for XR apps. In implementing OOUX, I focused on creating digital objects in XR that behaved in ways users instinctively expected. The twist-cap bottle in Salaryman RESCUE! wasn’t just about the visual mimicry of a real bottle; it was about ensuring that the action of twisting the cap off feels as satisfying and intuitive as it does in the real world. This approach extends beyond mere functionality; it’s about crafting an experience that resonates with the user’s innate understanding of object interactions.
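As a rough illustration of the objects-first idea, here is a minimal Python sketch. It is not code from Salaryman RESCUE!; the class name `SpatialObject`, the gesture names, and the feedback strings are all hypothetical, invented only to show the shape of the approach. Each object owns its interactions, every object can at least be grabbed, and an unrecognised gesture still gets some acknowledgement rather than failing silently.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class SpatialObject:
    """A hypothetical in-world object that owns its own interactions."""
    name: str
    # Maps a gesture name to a handler that returns feedback for the user.
    interactions: Dict[str, Callable[[], str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Every object can at least be grabbed, even with no special use.
        self.interactions.setdefault("grab", lambda: f"{self.name} picked up")

    def interact(self, gesture: str) -> str:
        handler = self.interactions.get(gesture)
        if handler is None:
            # Assumed fallback: acknowledge the attempt instead of ignoring it.
            return f"{self.name} wobbles"
        return handler()


def make_bottle() -> SpatialObject:
    """Builds a bottle that opens by twisting or by smacking the cap."""
    state = {"open": False}

    def open_with(message: str) -> Callable[[], str]:
        def handler() -> str:
            if state["open"]:
                return "already open"
            state["open"] = True
            return message
        return handler

    return SpatialObject(
        "bottle",
        {
            "twist_cap": open_with("cap twists off with a fizz"),  # expected use
            "smack_cap": open_with("cap pops off"),  # the surprise use
        },
    )
```

Calling `make_bottle().interact("smack_cap")` opens the bottle through the unexpected route, while a plain `SpatialObject("stapler")` still responds to `"grab"`. The design choice this tries to capture is that the list of possible actions hangs off the object, so adding a new object automatically raises the question of what a curious player might try with it.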

Visual Language and Future Nostalgia

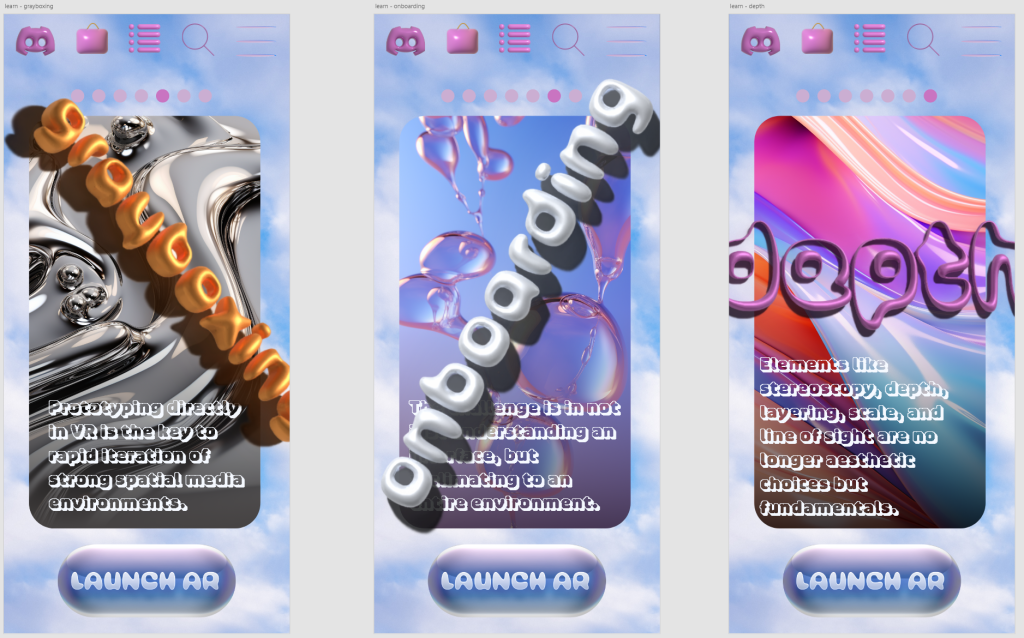

In a previous blog post, I reflected on the journey of creating a unique visual language for Spatial Synthesis as a project. The intent was for this theme to be used across both screen media and spatial media, and so it was quite an interesting challenge. The theme was developed for a university UX design class, and I began by considering the bubble buttons of the Y2K era, in which screen UI first utilised the illusion of depth. It seemed natural to take that illusion and turn it into a (digital) reality. By integrating these elements with the calming tones of blues, oranges, and purples found in natural skies, I aimed to create a user experience that was both exciting and comforting. This visual approach was designed as a bridge, connecting users to the new and unfamiliar world of XR through the lens of familiar and nostalgic design elements. While I never got to the fun part – designing the website in XR – I am very happy with the mobile website I designed, which utilised this aesthetic. It can be viewed here.

The challenge was finding the right design language that would resonate with the spatial depth of XR. Y2K’s gel-like bubble buttons and glossy finishes, best remembered in Apple’s Mac OS X 10.0 Cheetah, caught my eye. Although not initially designed for XR, their shape and transparency were exaptated to function beautifully as 3D objects in this new context.

https://dystopianthunderdome.wordpress.com/2023/09/15/spatial-synthesis-reflection/

Project Outcomes and Future Direction

As for the current state of Spatial Synthesis, it’s a mixed bag. The big, shiny digital artefact – the comprehensive website, the bustling community, the detailed design bible – didn’t materialise as I had initially hoped. The realities of VR game production and academic commitments took precedence. I also didn’t pursue certain elements of XR design as much as I would have liked to: namely imagineering and environmental storytelling à la the work of Don Carson. However, what I did achieve was perhaps more valuable than a questionably useful hypothetical community: a rich set of insights into XR design grounded in biological understanding, and a unique and exciting visual language that can be repurposed for XR apps.

The question of where Spatial Synthesis goes from here is open. There’s the option to continue as initially planned, but I don’t really want to. I’d much rather take the insights I’ve gained, make the best possible spatial media experiences I can, and let those do the talking. Watch this space.

Conclusion?

This was a little ramble-y and unstructured, so I’ll do us both the favour of wrapping up the key takeaways from my time with Spatial Synthesis.

First and foremost, the application of exaptation in XR development was a game-changer. It wasn’t just about repurposing elements for new uses; it was about rethinking how we interact with technology on a fundamental level, influenced by our evolutionary biology. This approach allowed for the creation of experiences that felt intuitive and familiar, yet excitingly novel. It taught me that innovation in XR doesn’t always mean reinventing the wheel. I was much better off getting out into the world, picking up objects, and thinking, “how can I make this do something new?”

Secondly, I addressed UX whiplash through an object-oriented design approach. By focusing on how users naturally interact with objects, I was able to design experiences that minimised dissatisfaction and maximised presence. This insight is something I plan to carry forward in all my XR projects. The key takeaway here is simple: in XR design, I must respect my users’ instinctual interactions with their environment.

Lastly and somewhat lamely compared to the above realisations, I made a cool visual aesthetic that works across flat and spatial media. I’m pretty proud of that, and I want to use it in a future app.

Looking ahead, I think these insights may well be foundational for my future work in XR design. They have shaped my understanding of what makes an XR experience truly engaging and user-friendly, and this project has forced me to put them into words, rather than leaving them as vague feelings that I only somewhat understood. Reflecting on the social utility of Spatial Synthesis, I think the project has aligned well with my career aspirations, and I hope that any XR designers or developers reading this have benefited from it. While the project may not have reached all the lofty goals I had set for it, the journey itself has been invaluable, offering insights that will inform my design choices for years to come.